yProv4ML

This library is part of the yProv suite, and provides a unified interface for logging and tracking provenance information in machine learning experiments, both on distributed as well as large scale experiments.

It allows users to create provenance graphs from the logged information, and save all metrics and parameters to json format.

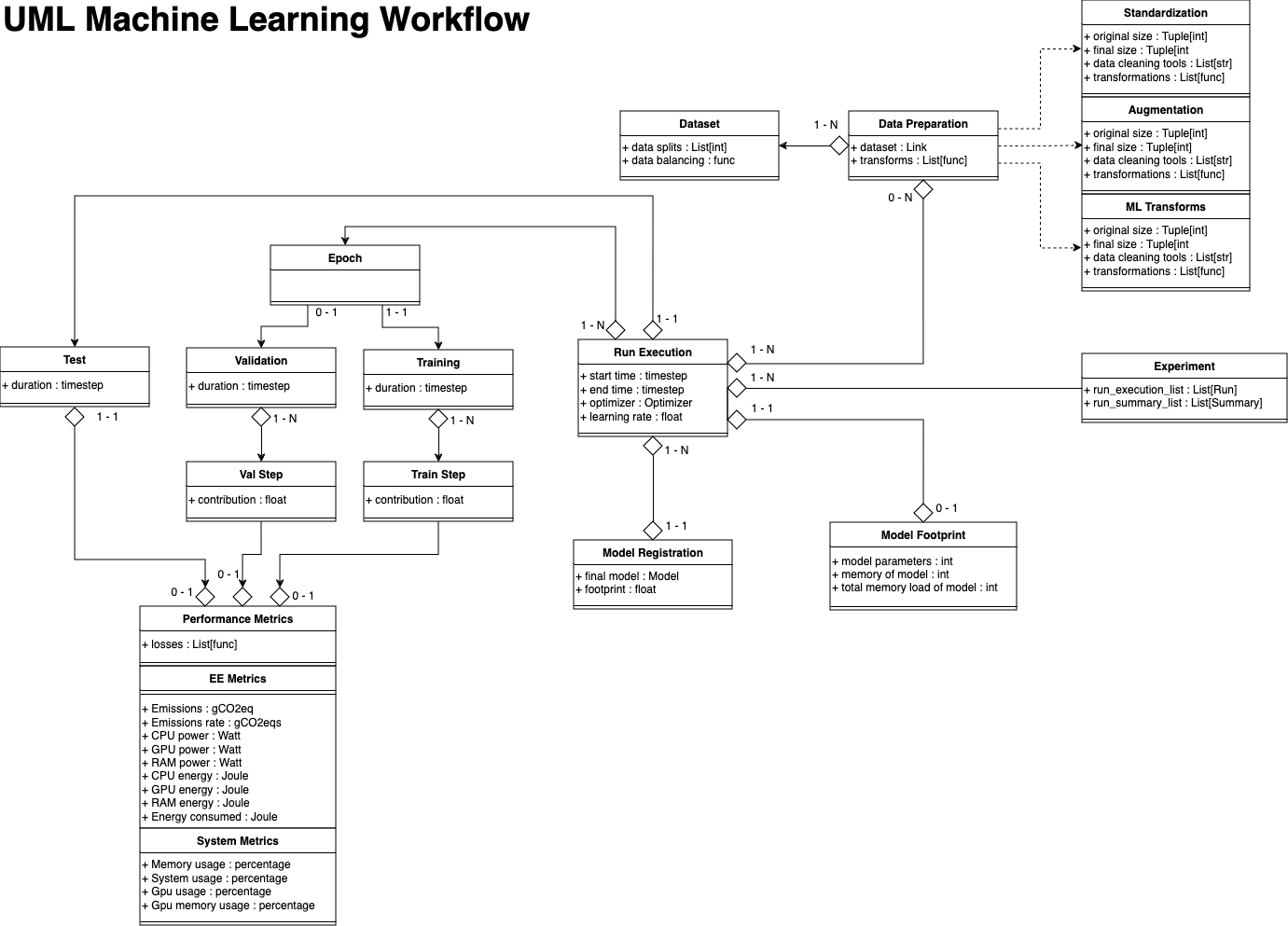

Data Model

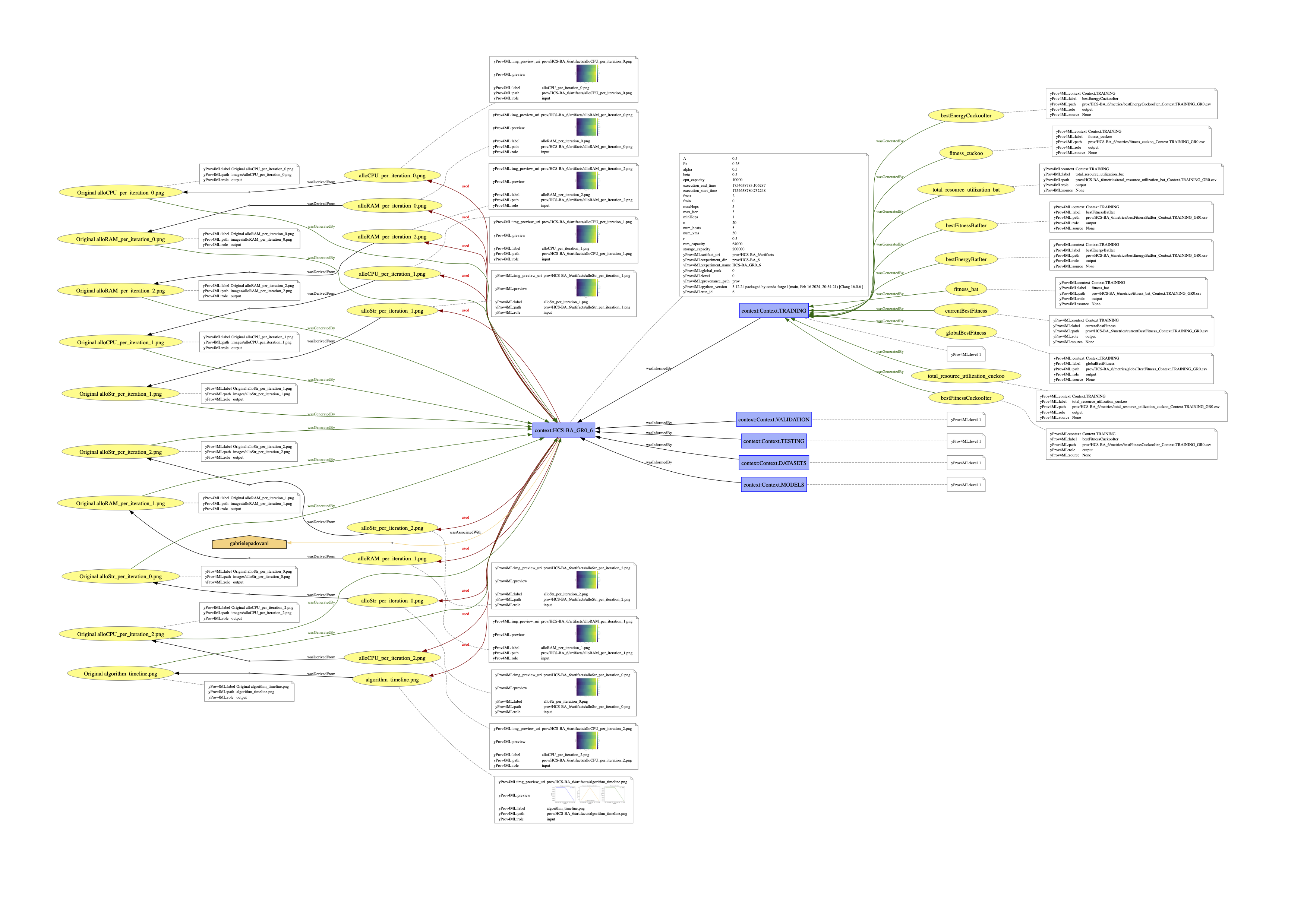

Example

The image shown above has been generated through one of the yProv4ML use cases. Several examples are present in the examples section and provided in the example directory on GitHub.

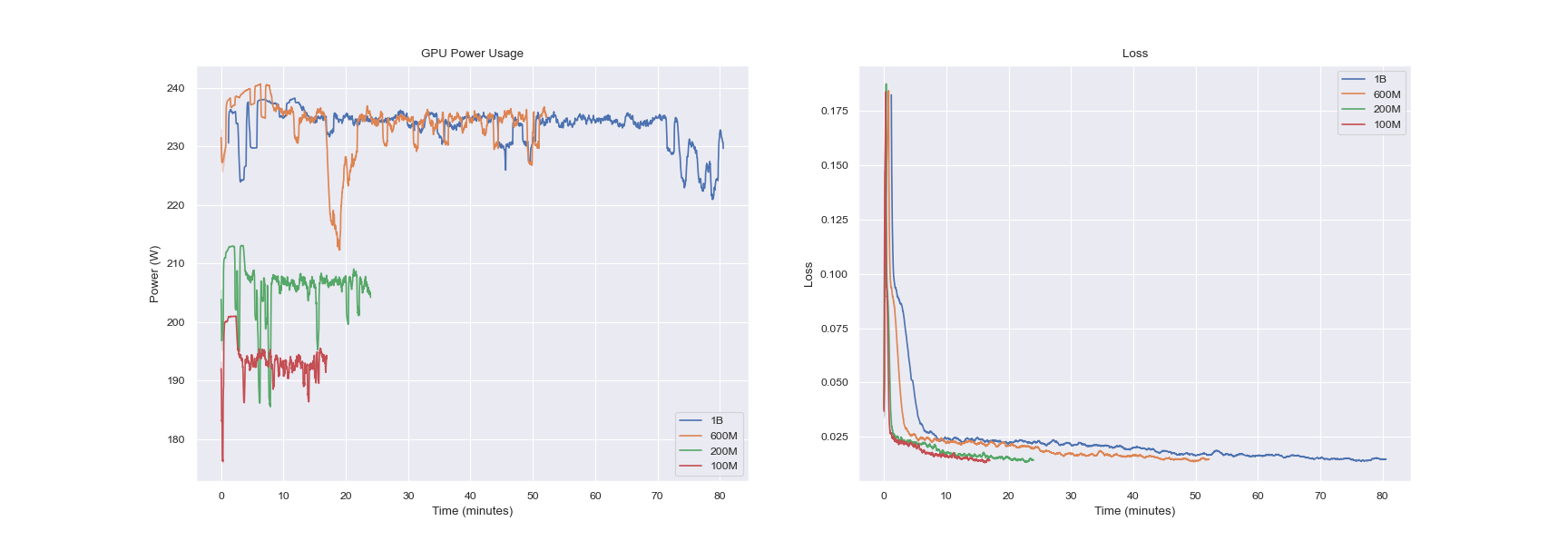

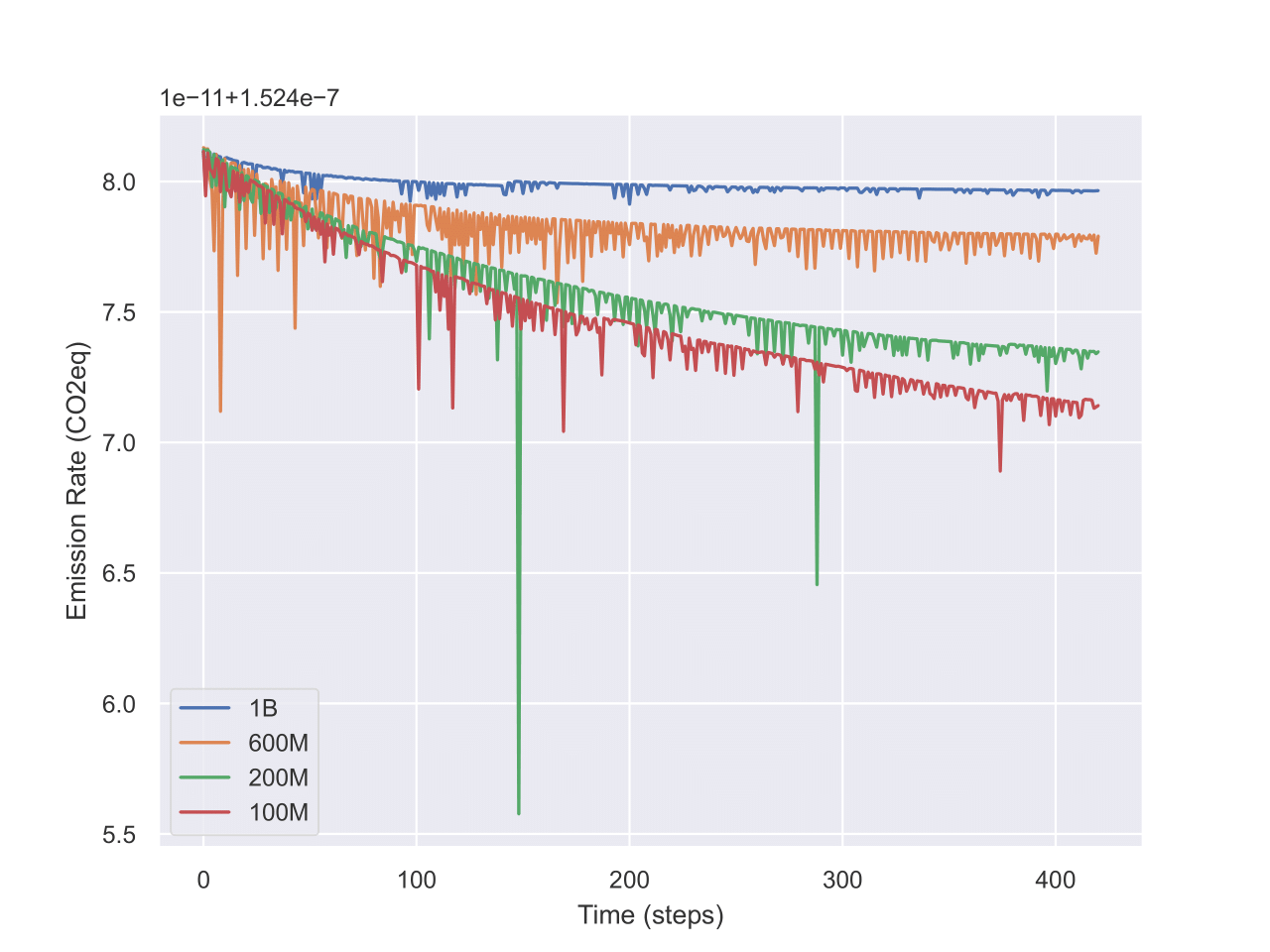

Metrics Visualization

Experiments and Runs

An experiment is a collection of runs where each run is a single execution of a python script.

By changing the experiment_name parameter in the start_run function, the user can create a new experiment.

All artifacts and metrics logged during the execution of the experiment will be saved in the directory specified by the experiment ID. Additionally, a global rank (GR) parameter is tracked, to enable the library to save data in multi-process environments.

Several runs can be executed in the same experiment. All runs will be saved in the same directory (according to the specific experiment name and ID).